The paper outlines an approach that works despite background noise in the original recording and can work even if the speaker is emotional. Next to previous techniques, Microsoft’s should be able to produce results that are less flat and more accurate. To do so, the team proposes “an explicit audio representation learning framework that disentangles audio sequences into various factors such as phonetic content, emotional tone, background noise, and others”.

A More Versatile Solution

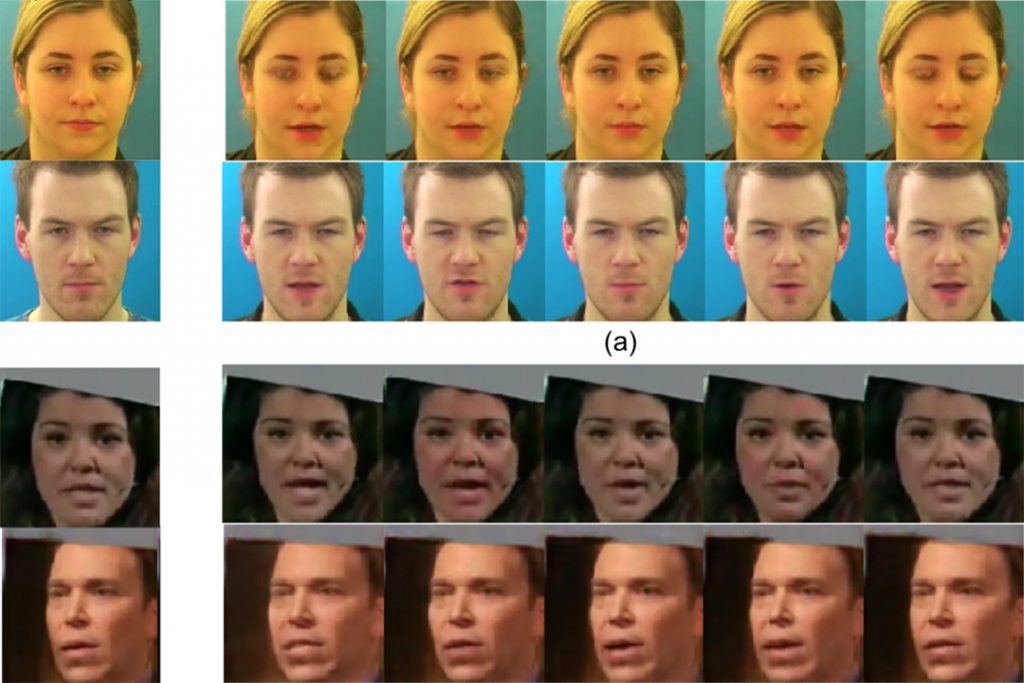

To prove its efficacy in all situations, the researchers replaced previous efforts with their framework. Importantly, publishers Gaurav Mittal and Baoyuan Wang say the approach will be important for real-world applications. The AI was trained on three data sets, 1,000 CREMA-D recordings, 7,442 LRS3 clips, and 100,000 sentences from TED talks. They comprised of speakers from ethnically diverse backgrounds to ensure accuracy across several accents and cultures. The paper did not include a full video but did include frames from several attempts. While they may not pass as human when applied to a high-quality, face-on source, they may appear accurate enough without close scrutiny. This, of course, raises questions about the use of the tech in the future. With the rise of deep fakes and fake news, could such techniques be used in the future to create misleading content? Microsoft’s general philosophy is to restrict the use of some AI tech to non-nefarious means, but such techniques can also create a power imbalance. It’s a difficult line to draw, but we may see these methods used in Azure solutions in the future.