On Windows and other platforms, CPU utilization is literally an overview of how much of the central processing unit is in use. It denotes the sum of all work being done by the CPU. Some tasks take more CPU time than others, but platforms like Windows use CPU utilization as an estimate of system performance. The problem is that CPU utilization is inaccurate, because there is a discrepancy between how the Task Manager represents cores and what is actually available: Windows measures all cores as if they were physical, when some may be logical. Gregg says the representation in the Task Manager is wrong. If the manager shows 80% CPU load, users could be forgiven for thinking 20% of capacity remains. However, Gregg says that in many cases a true representation would show a CPU that is stalled and waiting rather than doing useful work.
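To make the 80% example concrete, here is a minimal sketch in Python of how a reported "busy" figure could break down once stalled time is separated out. The function name `split_utilization` and the `stalled_share` input are hypothetical and chosen only for illustration. The stalled share is something you would have to measure with hardware performance counters, and it is not a value the Task Manager reports.

```python
def split_utilization(reported_busy_pct, stalled_share):
    """Split a reported busy percentage into working, stalled and idle parts.

    reported_busy_pct: the utilization figure a tool displays (0 to 100).
    stalled_share: hypothetical fraction (0 to 1) of that busy time in which
                   the CPU was stalled rather than making progress.
    """
    if not 0 <= reported_busy_pct <= 100:
        raise ValueError("reported_busy_pct must be between 0 and 100")
    if not 0 <= stalled_share <= 1:
        raise ValueError("stalled_share must be between 0 and 1")

    stalled = reported_busy_pct * stalled_share  # counted as busy, but no progress
    working = reported_busy_pct - stalled        # time spent doing real work
    idle = 100 - reported_busy_pct               # what the tool shows as free
    return working, stalled, idle


# Assumed figures: 80% reported load, half of it spent stalled.
working, stalled, idle = split_utilization(80, 0.5)
print(f"working: {working}%  stalled: {stalled}%  idle: {idle}%")
```

Under these assumed numbers, the machine that looks 80% busy is doing useful work only 40% of the time, which is the gap between the displayed figure and the true picture that Gregg describes.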

This stalled state can be seen in action any time you do something taxing enough to create an obvious performance slowdown. Gregg suggests that memory access can slow down a machine. Interestingly, he uses a case study of two servers running the same workload but performing differently. The cause seems to be the Meltdown and Spectre patches, which were widely reported to cause performance slowdowns.

PTI Performance Loss

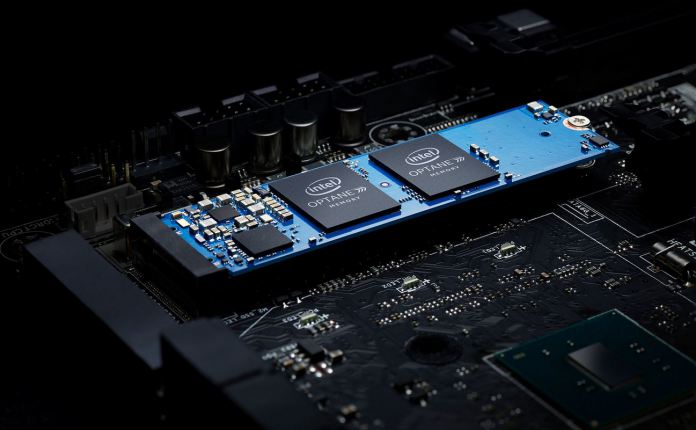

Intel already uses PTI on newer processors. PTI (page table isolation) places the kernel in a dedicated address space, so it is no longer mapped into every running process while still remaining available when needed. While it is possible to compensate for this in newer chips optimized for PTI, on existing processors PTI is predicted to have a major impact on performance. The Meltdown and Spectre CPU flaws affect hundreds of millions of devices. When a command is executed on a PC, the CPU hands system control to the kernel. Traditionally, the kernel has stayed mapped into the virtual memory address space of every process, a design intended to make systems more efficient and deliver better performance.